In this post, I'll cover the migration of this web site from a long history of local hosting to Windows Azure Web Sites.

I've had my own personal domains (GroupLynx.com, followed by irritatedvowel.com, followed by 10rem.net) since the mid-90s. Early on, I used regular hosts. For at least a decade, though, I've run my personal website out of a succession of servers in my basement. It used to be serious geek cred to run all your own servers. Here's a photo of my site's hardware in 2003:

Back in 2007, I dumped the old desktops I was using and moved to a couple of IBM 345 Xeon-based eServers in a big old APC rack. One server was for the database, the other for the website. In addition, I had my own email server and domain controller at the time, using old PCs housed in rack cases.

(Left photo shows the top of the rack, right photo shows the bottom of the same rack. Not shown: the Ethernet switch near the top.)

With these wonderful servers came increased cooling needs. That meant putting a window shaker air conditioner in the utility room. Not only was this ugly, but I'm pretty sure it was costing me a fair bit over time, even at the relatively high temperature setting I kept it on. Purchasing a refrigerated rack wasn't an option. Neither was a purpose-built cooling unit with a blower on the front; those just cost too much to purchase and ship.

Being your own IT guy leaves you with no one to yell at

Being your own IT guy is a pretty thankless job. I've had RAID drives go defunct, servers die, and the power to the whole house drop at least twice a year. Each time that happened, my site had downtime. Especially after power outages, it was always a question whether the servers would all come back online properly or whether I'd find myself scrambling to fix things.

Also, all this equipment in the utility room made a lot of noise. That noise was picked up by the uninsulated ducting and broadcast throughout the house. You could hear the servers if you were near any of the central heating/cooling ducts on a quiet night.

Last summer, during the derecho storm and related power outage, my database server lost another drive. The RAID configuration couldn't handle that because, as it turns out, one of the other drives had been bad for a while and I hadn't realized it. That meant the entire second logical drive (the data drive) was gone. Luckily, I had a backup of the site data, but I had no spare drives. So I cleaned up the OS drive and freed up enough room to store the database there.

I investigated hosts for quite a long time. Even though my blogging has slowed down with my newer role at Microsoft, my site still gets enough traffic to put it outside the bounds of most inexpensive hosts. I looked at Azure and at the Azure Web Sites preview back then. However, the preview had a number of issues that prevented me from moving to it, not the least of which was that you couldn't have a domain name that began with a number (a simple regex validation bug that stopped me cold).

So I shelved the idea for a while. In the meantime, the site has been down a number of times due to power outages. I did migrate email off-site, which is good, as that server eventually died, and the blacklisting that came with not being a proper host got to be a bit too much. Oh, and I realized my server was starting to be used as an open relay, which kept happening despite all the patching and other work I did.

Moving to Azure

Earlier this year, I made up my mind to move to Azure Web Sites during my first vacation. Right after Build, I took two weeks off and used a small part of that time for the migration. My wife and kids were up at my mother-in-law's, so the house was quiet and I could really concentrate on the move.

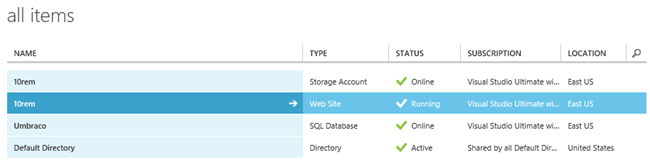

This site has been running on Windows Azure since the early morning hours of July 9, 2013. The timing was perfect, as I had yet another power outage, this one killing the domain controller. No, I didn't have a backup domain controller. That made some server tasks…challenging, but not impossible.

When moving to Azure there were several steps:

- Create the Azure account

- Create a Windows Azure Web Site instance

- Create a database server (if required)

- Copy the site files

- Migrate data

- Map domain names

My site runs an old version of Umbraco that I have customized to better support my blog. There's a fair bit of custom code in there, so migrating to a newer version would be a chore. For this move, I decided to just bring the whole thing over wholesale. Using an FTP client, I was able to move the site files over quite easily. In fact, there's almost nothing interesting about that process. Azure gives you the FTP address, and you just dump files to it. Simple as that.
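If you'd rather script the copy than drag folders around in an FTP client, Python's standard `ftplib` module is enough. Here's a minimal sketch, assuming you already have the FTP host name and deployment credentials from the portal; `upload_tree` is a hypothetical helper, and `/site/wwwroot` is the usual web root on an Azure Web Site.

```python
import ftplib
import os


def upload_tree(ftp, local_dir, remote_dir):
    """Recursively copy local_dir into remote_dir over an open FTP connection.

    Returns the number of files uploaded. `ftp` is an ftplib.FTP instance
    (or anything with the same mkd/storbinary methods).
    """
    try:
        ftp.mkd(remote_dir)
    except ftplib.error_perm:
        pass  # most likely the directory already exists on the server
    uploaded = 0
    for entry in sorted(os.listdir(local_dir)):
        local_path = os.path.join(local_dir, entry)
        remote_path = remote_dir.rstrip("/") + "/" + entry
        if os.path.isdir(local_path):
            uploaded += upload_tree(ftp, local_path, remote_path)
        else:
            # Binary mode so DLLs, images and config files arrive byte-for-byte.
            with open(local_path, "rb") as fh:
                ftp.storbinary("STOR " + remote_path, fh)
            uploaded += 1
    return uploaded
```

To use it, open a connection with `ftplib.FTP(host)` and `ftp.login(user, password)` using the values from the portal, then call `upload_tree(ftp, local_folder, "/site/wwwroot")`. It's deliberately naive (no retries, and it re-uploads everything on each run), which is fine for a one-time wholesale move.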

The database was a bit more interesting.

Because I use an external database server, and not something simple like VistaDB, I had to export data to the Azure database. If you use a file-based database like VistaDB with your install, this whole process becomes much simpler. I tried many different ways of getting the data over; of all the steps, this was the most time-consuming. (Note that I'm using a SQL Database Business edition. Web would work fine, and you can switch between them as necessary. Currently, they both have the same pricing and features, as I understand it.)

It sounds so simple, though. Why was it time-consuming?

Exporting the BACPAC

The reason was that I was running a bare SQL Server 2008 install (no SP, no R2) and using its client tools. What I didn't realize until I spoke with another person on the team is that I needed to use the SQL Server 2012 client tools, and then the migration would be dead simple. Let me save you that time: if you want to move a SQL database to Azure, regardless of its version, grab the SQL Server 2012 client tools.

http://www.microsoft.com/en-us/download/details.aspx?id=35579

Download the full installer with tools, SQLEXPRWT_x64_ENU.exe (or the 32-bit version if you are on a 32-bit OS), not the one oddly named SQLManagementStudio. Because, well, that package doesn't include Management Studio.

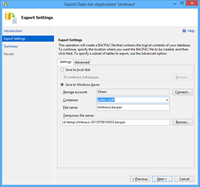

You'll also need to create and configure a Windows Azure storage account and a container; this is where the export package will reside. To get the account key, go into the storage account's configure page and choose "Manage Access Keys".

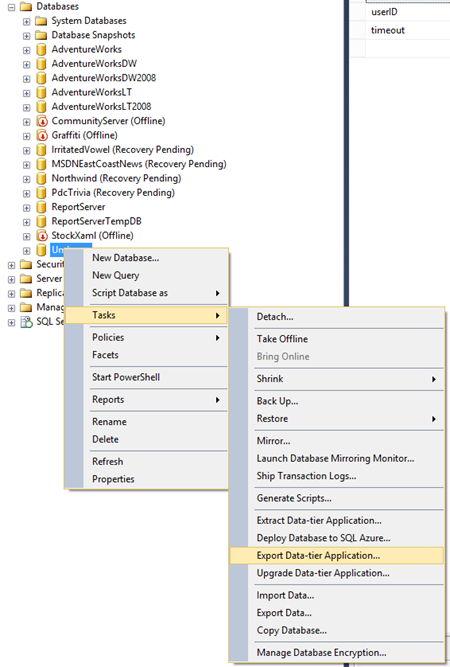

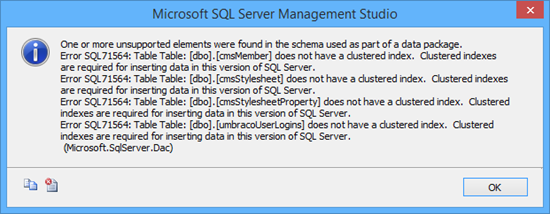
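When you point the export at storage, the package ends up as a blob in that container, and blob addresses follow a fixed pattern. A minimal sketch of where the file lands, assuming the standard `blob.core.windows.net` endpoint; `bacpac_blob_url` and the `<database>-<stamp>.bacpac` file name are hypothetical, and the name checks mirror the storage naming rules (account names are 3 to 24 lowercase letters and digits; container names are 3 to 63 lowercase letters, digits, and single hyphens).

```python
import re
from urllib.parse import quote

# Azure storage naming rules: account names are 3-24 lowercase letters/digits;
# container names are 3-63 lowercase letters, digits and single hyphens,
# starting and ending with a letter or digit.
ACCOUNT_RE = re.compile(r"[a-z0-9]{3,24}")
CONTAINER_RE = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}")


def bacpac_blob_url(account, container, database, stamp):
    """Return the blob URL where an exported bacpac would live.

    The "<database>-<stamp>.bacpac" file name is a hypothetical convention.
    """
    if not ACCOUNT_RE.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not CONTAINER_RE.fullmatch(container):
        raise ValueError(f"invalid container name: {container!r}")
    blob_name = quote(f"{database}-{stamp}.bacpac")
    return f"https://{account}.blob.core.windows.net/{container}/{blob_name}"
```

Checking the names up front is cheap, and it's a lot nicer than having the export wizard fail after it has already churned through the database.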

Once I had the right product, I was able to export what is known as a "bacpac" file. This is not the same as a "dacpac": a bacpac carries the data as well as the schema, and exporting one is an option only available in the 2012 client tools. You'll need to export (not extract) the BACPAC from the database using the client tools. This is right on the context menu for the database, under Tasks > Export Data-tier Application.

This then brings you through a wizard. There aren't that many options. Note that you can have the bacpac uploaded to Azure automatically; this is the option I recommend you choose.

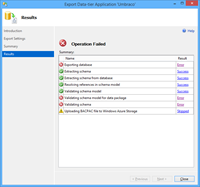

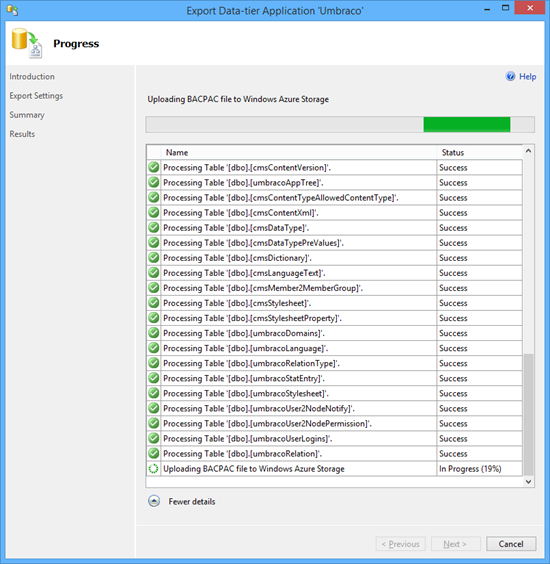

Because of my old SQL install and the newer standards, I was missing clustered indexes in the Umbraco database. Windows Azure SQL Database requires a clustered index on every table, so the export failed. Stuff like this must drive DBAs mad.

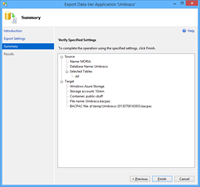
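To find the offending tables, you can ask SQL Server for every user table that has no clustered index (a "heap") and then add one to each. Here's a minimal sketch in Python that just holds and generates the T-SQL: `OBJECTPROPERTY(..., 'TableHasClustIndex')` and the `sys.tables`/`sys.schemas` catalog views are standard SQL Server, while `clustered_index_ddl` and the `CIX_` index naming are hypothetical. Which column to cluster on is a per-table call; the table's existing primary key or identity column is the usual choice.

```python
# Lists user tables that have no clustered index (heaps).
# OBJECTPROPERTY(..., 'TableHasClustIndex') is a built-in SQL Server property.
FIND_HEAPS_SQL = """
SELECT s.name AS schema_name, t.name AS table_name
FROM sys.tables AS t
JOIN sys.schemas AS s ON s.schema_id = t.schema_id
WHERE OBJECTPROPERTY(t.object_id, 'TableHasClustIndex') = 0
ORDER BY s.name, t.name;
"""


def quote_ident(name):
    """Bracket-quote a SQL Server identifier, doubling any ']' inside it."""
    return "[" + name.replace("]", "]]") + "]"


def clustered_index_ddl(schema, table, columns):
    """Build a CREATE CLUSTERED INDEX statement for one heap table.

    The CIX_<table> index name is a hypothetical naming convention.
    """
    if not columns:
        raise ValueError("choose at least one column to cluster on")
    cols = ", ".join(quote_ident(c) for c in columns)
    return (f"CREATE CLUSTERED INDEX {quote_ident('CIX_' + table)} "
            f"ON {quote_ident(schema)}.{quote_ident(table)} ({cols});")
```

Run `FIND_HEAPS_SQL` in Management Studio, pick a column for each table it returns, and run the generated statements before trying the export again.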

Once I went in and created clustered indexes for all tables, the export completed without a hitch.

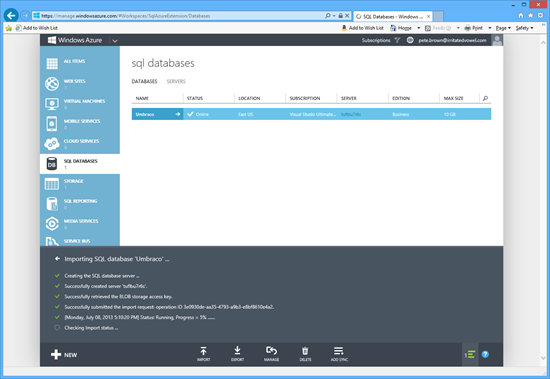
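If you exported to a local file, it can be reassuring to peek inside before importing it. As I understand the format, a bacpac is a ZIP package with a `model.xml` schema file at the root plus a `Data` folder holding per-table data files (a dacpac has the schema but no data). A minimal sketch, assuming that layout; `summarize_bacpac` is a hypothetical helper, not part of the SQL tools.

```python
import zipfile


def summarize_bacpac(path):
    """Return (has_model, tables_with_data) for a .bacpac package.

    Assumes a ZIP package with model.xml at the root and table data
    stored under Data/<schema>.<table>/.
    """
    with zipfile.ZipFile(path) as package:
        names = package.namelist()
    has_model = "model.xml" in names
    tables = sorted({name.split("/")[1] for name in names
                     if name.startswith("Data/") and name.count("/") >= 2})
    return has_model, tables
```

An empty table list for a database you know has content would suggest you extracted a dacpac rather than exported a bacpac.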

Then, I went into Azure and chose the option to import the database from the bacpac file. I had created a database prior to this, but decided to simply delete it and let the import create everything for me from scratch. After about 10 minutes of Azure showing me 5% complete (which, I was told, means you're queued), the import completed and the database was online.

Painless! At that point, you can delete the bacpac if you're comfortable the database is up and running. I verified the data using the 2012 client tools; they could connect to Azure as easily as to any other networked database.
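Whether it's Management Studio or the site's web.config, the connection is an ordinary SQL Server one pointed at the Azure host name. A minimal sketch that builds an ADO.NET-style connection string, assuming the server, database, and login are whatever you created in the portal; `azure_sql_connection_string` is a hypothetical helper, and the `login@server` user name, port 1433, and `Encrypt=True` follow the connection strings the portal shows for a SQL Database.

```python
def azure_sql_connection_string(server, database, login, password):
    """Build an ADO.NET-style connection string for a Windows Azure SQL Database.

    `server` is the short server name from the portal, without the
    .database.windows.net suffix.
    """
    parts = {
        "Server": f"tcp:{server}.database.windows.net,1433",
        "Database": database,
        # Azure SQL Database logins are given as login@server.
        "User ID": f"{login}@{server}",
        "Password": password,
        "Trusted_Connection": "False",  # SQL authentication, not Windows
        "Encrypt": "True",  # connections to Azure should be encrypted
        "Connection Timeout": "30",
    }
    for key, value in parts.items():
        if ";" in value:
            # Values containing ';' would need quoting; keep the sketch simple.
            raise ValueError(f"{key} contains ';'")
    return "".join(f"{key}={value};" for key, value in parts.items())
```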

The domain

The next step was to map the domain over. I had to do this because my build of Umbraco 4 includes redirect code that sends you to the main URL for the site regardless of what you type in. That makes testing on a non-production URL impossible. I don't recall if this is something I added to make sure I could use a naked domain (one without the www) or if it was something intrinsic in Umbraco 4.
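The effect of that kind of redirect is easy to see in a small sketch: any request whose host isn't the canonical one gets bounced, so a test address such as the `azurewebsites.net` name never actually serves a page. This is a Python illustration of the idea, not the actual Umbraco (.NET) code; `canonical_redirect` and the choice of `10rem.net` as the canonical host are assumptions for the example.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "10rem.net"  # assumption: the site's one "main" host name


def canonical_redirect(url, canonical_host=CANONICAL_HOST):
    """Return the URL to redirect to, or None if url already uses canonical_host.

    Scheme, path and query string are kept; only the host is replaced
    (any explicit port is dropped along with it).
    """
    parts = urlsplit(url)
    if (parts.hostname or "") == canonical_host:  # .hostname is lowercased
        return None
    return urlunsplit((parts.scheme, canonical_host, parts.path,
                       parts.query, parts.fragment))
```

Letting the `azurewebsites.net` host through as well would make pre-DNS testing possible, at the cost of serving the same content at two addresses.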

Now, if you're smart, you'll do all this before you even start on the database import. Why? Because DNS changes take time to propagate.

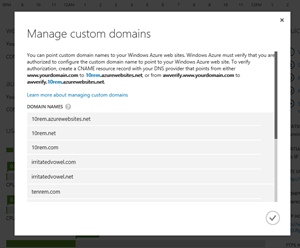

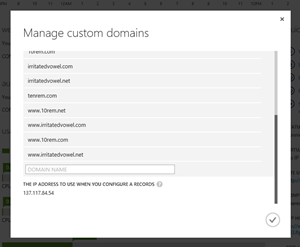

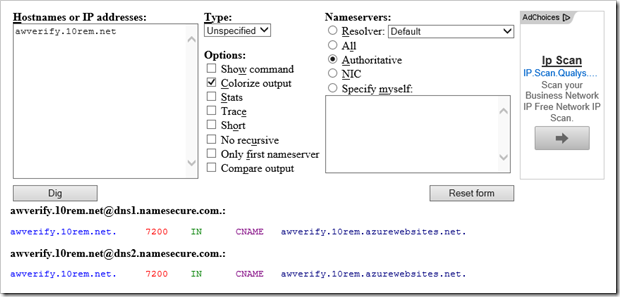

Before you can map domains, Windows Azure requires that you create some CNAME records for verification purposes, for each unique domain, as shown in the above screenshot. You can either map the host name itself with a CNAME or use the purpose-named awverify subdomains. Azure is specific about this, so make sure you have the names mapped exactly as requested. For example, here's my main 10rem.net zone file with the Azure-required records still mapped:

```
; MX Records:

; A Records:
10rem.net.              IN A      137.117.84.54
www.10rem.net.          IN A      137.117.84.54

; CNAME Records:
awverify.10rem.net.     IN CNAME  awverify.10rem.azurewebsites.net.
awverify.www.10rem.net. IN CNAME  awverify.10rem.azurewebsites.net.

; TXT Records:
```

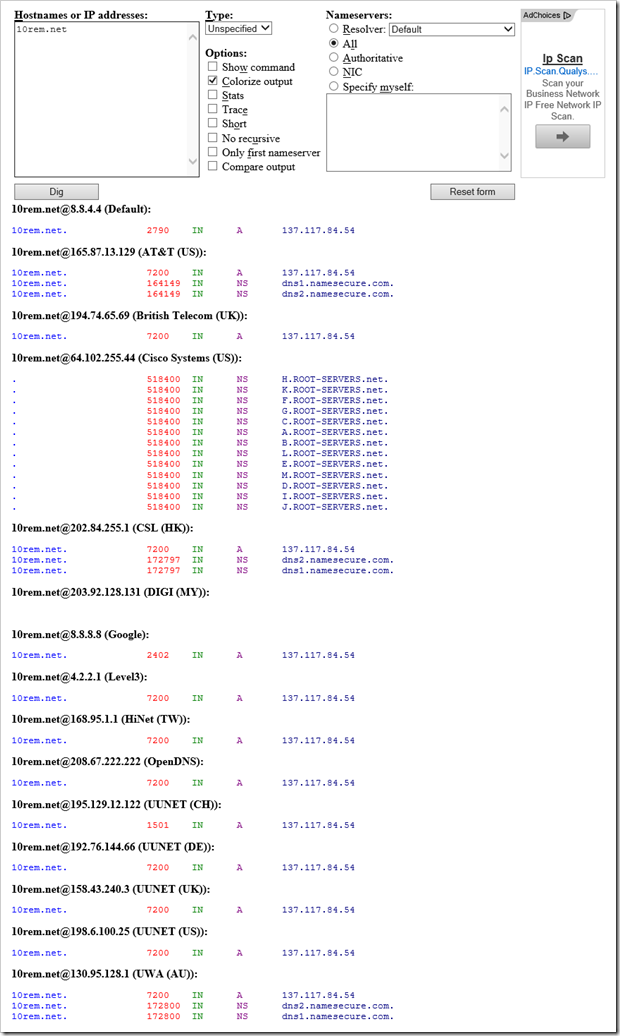
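The verification records follow a simple pattern: `awverify.` in front of each custom host name, pointing at `awverify.` in front of your site's `azurewebsites.net` name. A minimal sketch that generates them, assuming that pattern holds for every domain you map (it reproduces the CNAME lines in the zone file above); `awverify_records` is a hypothetical helper.

```python
def awverify_records(site, domains):
    """Return zone-file CNAME lines for Azure's awverify domain check.

    site:    the Azure Web Site name (the part before .azurewebsites.net)
    domains: each custom host name you plan to map
    """
    target = f"awverify.{site}.azurewebsites.net."
    return [f"awverify.{d.rstrip('.').lower()}. IN CNAME {target}" for d in domains]
```

For example, `awverify_records("10rem", ["10rem.net", "www.10rem.net"])` produces the two CNAME lines shown in the zone file.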

You can't map your domain in Windows Azure until those CNAME records become visible to Azure. One way to track that is to use this website:

http://www.digwebinterface.com/

Using this site, you can see the state of propagation for your DNS entries. It's quite useful. Simply pick "all" and enter the host names. Here's what it finds for my main domain:

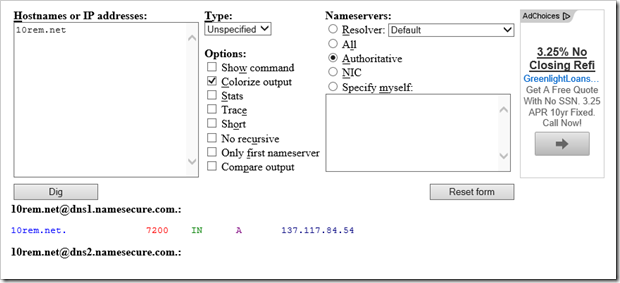

If you want to check just the authoritative name server for your domain, select that from the options.

It's quite useful to monitor progress, as you don't want to wait two or more hours only to find out you messed something up.
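If you'd like a rough local check alongside the web tool, a small polling loop will do. Python's standard library has no general DNS query API, so this minimal sketch only watches the A record through your own resolver (for example, that 10rem.net resolves to the Azure address 137.117.84.54) and can't see the awverify CNAMEs directly; `wait_for_a_record` is a hypothetical helper, and the two-hour timeout and five-minute interval are arbitrary.

```python
import socket
import time


def resolve_a(host):
    """Return the IPv4 addresses your local resolver gives for host (may be empty)."""
    try:
        return set(socket.gethostbyname_ex(host)[2])
    except OSError:  # socket.gaierror / socket.herror when the name doesn't resolve
        return set()


def wait_for_a_record(host, expected_ip, resolve=resolve_a,
                      timeout=2 * 60 * 60, interval=5 * 60):
    """Poll until host resolves to expected_ip.

    Returns True once it does, or False when timeout seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        if expected_ip in resolve(host):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Because it only asks one resolver, a `True` here doesn't mean every resolver has the change; that's exactly what the multi-resolver view on the site is for.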

Here's the awverify verification:

Note the CNAME entry: that's what Azure requires.

Azure in Production

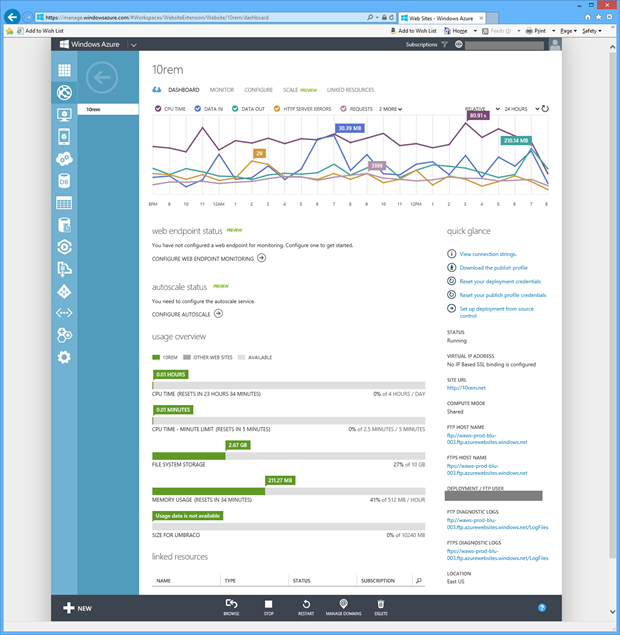

My Azure Web Site is running in Shared mode, with .NET Framework 4.5, 64-bit, and no other special settings.

So far, with just this small shared instance, everything has been running very smoothly. I'll continue to monitor it in case it makes sense to add more capacity in the future. I can see adding perhaps one more instance, but so far it's quite happy as it stands today. My CPU time stays low. Memory usage is the highest number, at about 50% of capacity on average.

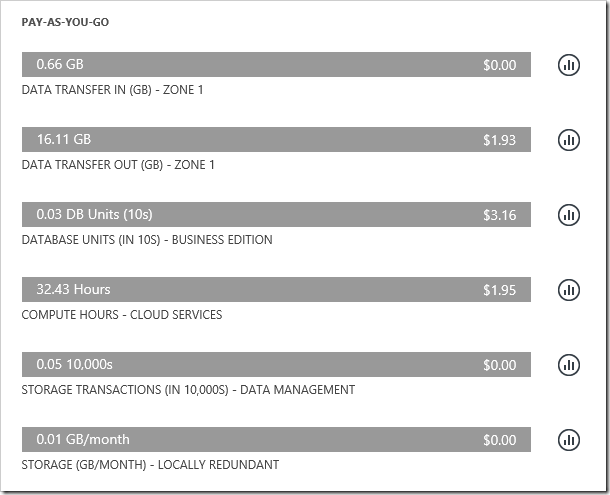

How's the cost? It's really very reasonable. Here's the cost so far, from late on 7/8 through today:

Yes, that's a whopping $7.04, or around a dollar a day. I've probably saved more than that in electricity just by shutting down the server rack and the associated AC unit.

I'm at about half the site visits I had in 2011. Once I pick up blogging at the pace I used to, I would expect that to go up. However, even at double or triple the cost, that's still a good deal considering I have a website with 3GB of files and several hundred MB of database.
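To put "double or triple the cost" in monthly terms, here's the arithmetic as a tiny sketch, assuming the roughly $1/day seen so far holds steady and a 30-day month; `monthly_cost` is just an illustrative helper, not anything from the Azure billing tools.

```python
def monthly_cost(daily_cost, multiplier=1, days=30):
    """Project a monthly bill from an observed daily cost."""
    return round(daily_cost * multiplier * days, 2)


# Roughly $1/day so far; what do double and triple look like per month?
for m in (1, 2, 3):
    print(f"{m}x: about ${monthly_cost(1.00, m):.2f}/month")  # $30.00, $60.00, $90.00
```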

Conclusion

So far, this experiment with Windows Azure Web Sites is going quite well. Once I had the right tools, getting the database over was simple. Copying the files over was a no-brainer, and even though my Umbraco website ran under an older version of .NET locally, it worked just fine when moved to the server.

My site traffic is not much different from that of some small businesses I've seen. For those, especially ones with seasonal traffic patterns, going with something like Windows Azure Web Sites (WAWS) makes perfect sense.