OK, that's it. I've had it. I want my pixels, dammit!

For a while, screen resolution kept going up on our desktop displays. The trend was good, as I've always wanted the largest monitor with the highest DPI that I could afford. I mean, I used to have one of the first hulking 17" CRTs on my desk. I later upgraded to a 21" job that was so huge that if you didn't stick it in a corner, it took up the whole desk. It had a flat screen, though, and was full of pixels. It cost me around $1100 at the time.

LCD

Then we switched over to LCD displays. At first they were all 4:3, but later most became widescreen 16:10. Still, the resolutions were slowly but steadily going up. I figured it would be only a matter of time before massive displays with super high DPI became available. Now mind you, I don't want super high DPI to fit more info; I want super high DPI so I can get extra-smooth text and screen elements. Windows supports high-DPI displays; we just don't really have any.

If the DPI on screens were high enough, we wouldn't need antialiasing or font smoothing or any of the other hacks that get us around low-DPI issues. Think about printers: once the DPI got high enough, you could no longer tell there were individual dots making up the letters. That happens at about 600dpi (or 1200dpi if you're really paying attention). Heck, many folks look at 300dpi and think that's smooth. Our screens? Mostly still stuck at 96dpi.
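To put rough numbers on the printer comparison: type is measured in points, and a point is 1/72 of an inch, so the number of dots available for one em of a font is the point size times the DPI, divided by 72. Here's a quick Python sketch of that arithmetic. The 12-point size is just an example I picked, and em height is only a rough stand-in for how tall the letters actually are.

```python
# A point is 1/72 inch, so one em of a font spans size_pt * dpi / 72 device dots.
# The 12 pt size used below is an arbitrary example, not a value from the post.
POINTS_PER_INCH = 72


def em_height_in_dots(size_pt: float, dpi: float) -> float:
    """Return how many pixels/dots span one em of a font at the given DPI."""
    return size_pt * dpi / POINTS_PER_INCH


if __name__ == "__main__":
    # Screen DPI first, then the printer resolutions discussed above.
    for dpi in (96, 300, 600, 1200):
        print(f"{dpi:>5} dpi: {em_height_in_dots(12, dpi):6.1f} dots per 12 pt em")
```

At 96dpi a 12-point em gets 16 pixels to work with; at 600dpi it gets 100 dots. That gap is why the screen needs the smoothing hacks and the printer doesn't.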

My own primary screen (until I pick up the 30" later this year) is an older HP 2335 running at 1920x1200. It has a "TCO 03" sticker on it, to give you an idea of its age.

Then comes HDTV, and a backslide on resolution and DPI

Now, suddenly, the market is all about displays that show 1920x1080 "1080p HD" resolution. Wait!! What happened to all those wonderful pixels at the bottom? 120 pixels may not seem like much, but they matter when you work in software that needs every last inch of your screen (development, design, video editing, etc.). The brand-new display to the left of the HP is actually an inch larger and the same resolution, so it has a slightly lower real DPI.

At the rate we were going for a while, we should have had two or three times the DPI on a 23- or 24-inch screen. But nooo.

Now all the panel manufacturers are hitting the consumer sweet spot of HDTV resolution. There's more money to be made in TV than in supporting alpha geeks with super-high-res displays. The highest resolution I've seen is on a 30" display, and those still cost a buttload ($1200 to $1800 each) because of the lack of competition.

Even the laptop manufacturers are backsliding and going to these piddly netbook-class panel resolutions.

Average DPI is going down because TVs sell based largely on size. You end up with a 60" screen running at the same resolution as new 23" computer displays. They have big fat pixels the size of thumbtacks (OK, not quite that large).

We know we can handle higher-DPI panels. Look at laptops running the same 1920x1200 resolution on 15" screens. Heck, look at phones doing 800x480 on a 4.1" OLED display. We have the technology. It can be done.
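For a rough side-by-side of the panels mentioned above, pixel density (what I've been calling screen DPI) is the diagonal resolution in pixels divided by the diagonal size in inches. Here's a minimal Python sketch, assuming square pixels and taking each size and resolution exactly as quoted in this post. The `ppi` function and `PANELS` list are just illustrative names.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch along the diagonal, assuming square pixels."""
    # hypot gives the diagonal length in pixels: sqrt(w^2 + h^2).
    return math.hypot(width_px, height_px) / diagonal_in


# Panels mentioned in the post: (label, width px, height px, diagonal inches).
PANELS = [
    ('15" laptop, 1920x1200', 1920, 1200, 15.0),
    ('4.1" phone, 800x480', 800, 480, 4.1),
    ('23" desktop, 1920x1080', 1920, 1080, 23.0),
    ('60" TV, 1920x1080', 1920, 1080, 60.0),
]

if __name__ == "__main__":
    for label, w, h, diag in PANELS:
        print(f"{label:<24} {ppi(w, h, diag):6.1f} ppi")
```

That works out to roughly 151ppi for the 15" laptop, 228 for the phone, 96 for a 23" 1080p monitor, and a measly 37 for the 60" TV.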

Will OLED change things?

I understand that OLED is both easier and more efficient to manufacture. Hey, and OLED panels are more power-efficient, especially due to reduced backlighting requirements. [Correction: eliminated backlighting requirements, not reduced]

With mobile devices moving to OLED, will we see them on our desktops? If they're easier to manufacture and don't suffer from the same rate of dead-pixel problems, will that make the panel companies branch out into much larger displays? Or will they make them bigger, but at the same sucky 1920x1080 resolution?

Since OLED is done with plastics, can I just get one big honkin' panoramic display that wraps around me at my desk? Or am I going to have to wait for some new HD++ resolution to hit TV-land… or worse… have a 3D display on my PC?

I want my pixels. I want my DPI, and I want it in the form of a big honking display for my computer.

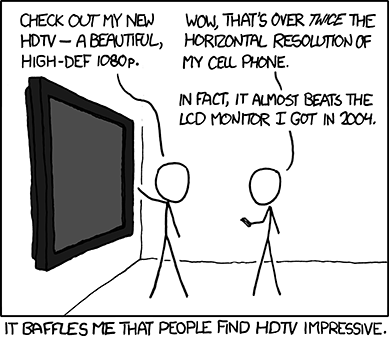

Update 2010-04-26: Here's a very timely XKCD on this very topic: